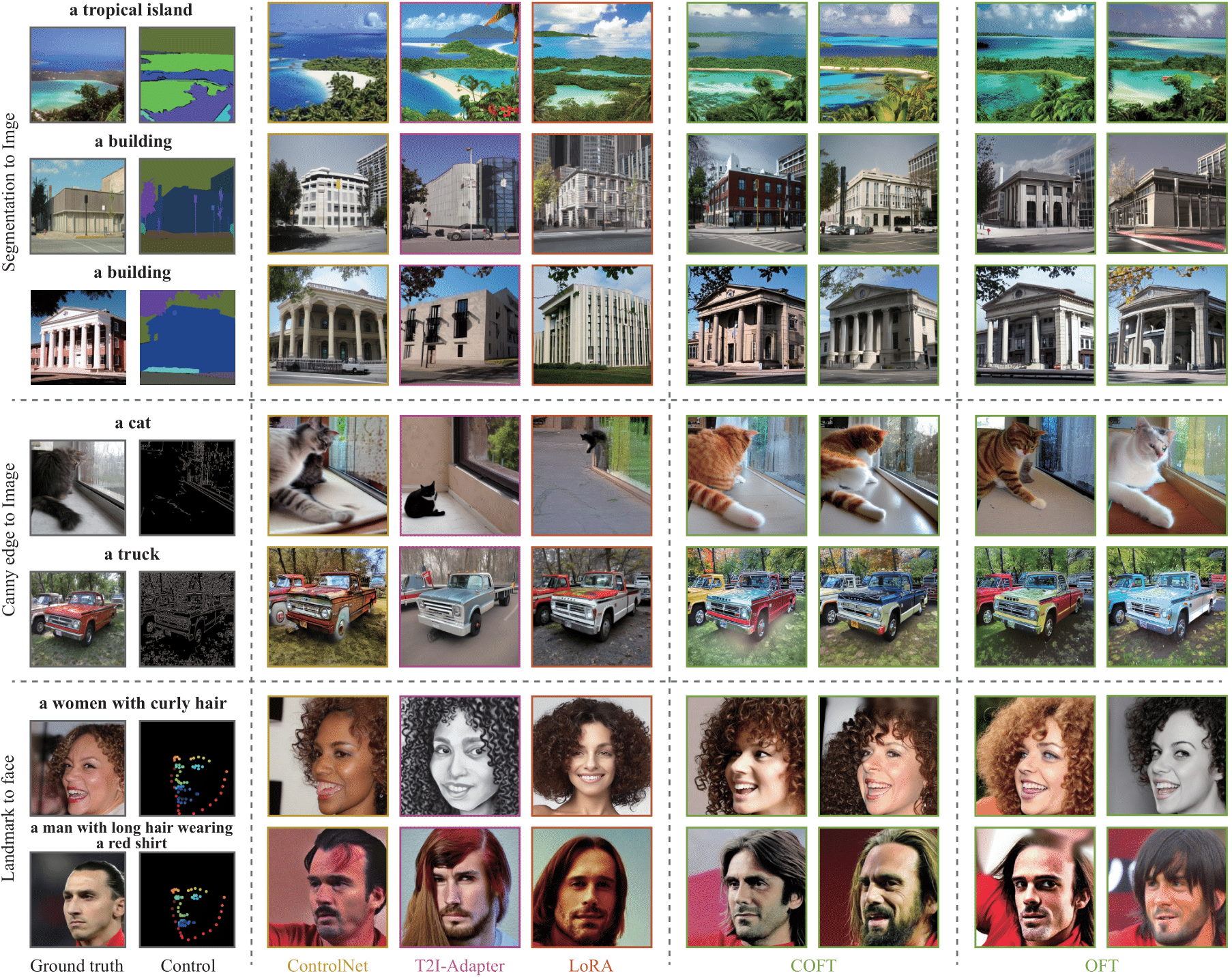

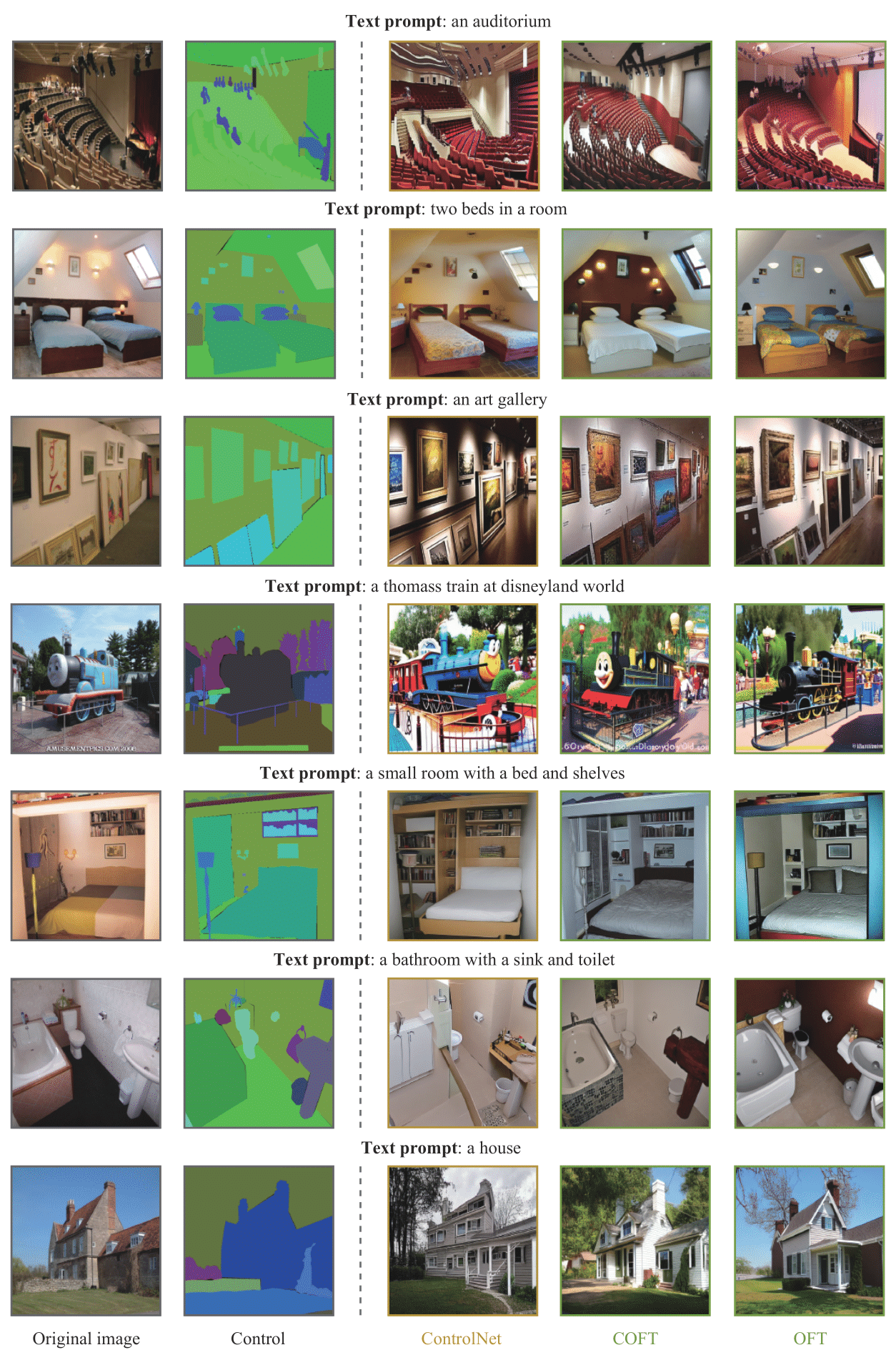

OFT can effectively and stably finetune text-to-image diffusion models.

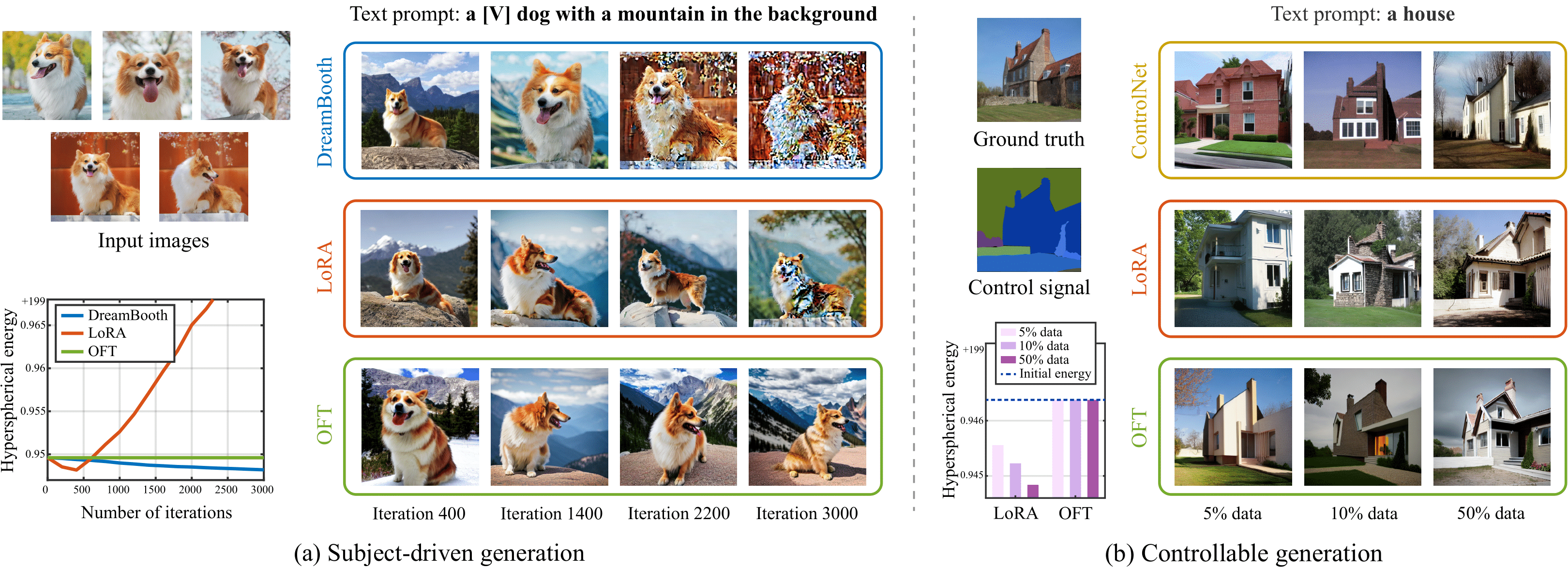

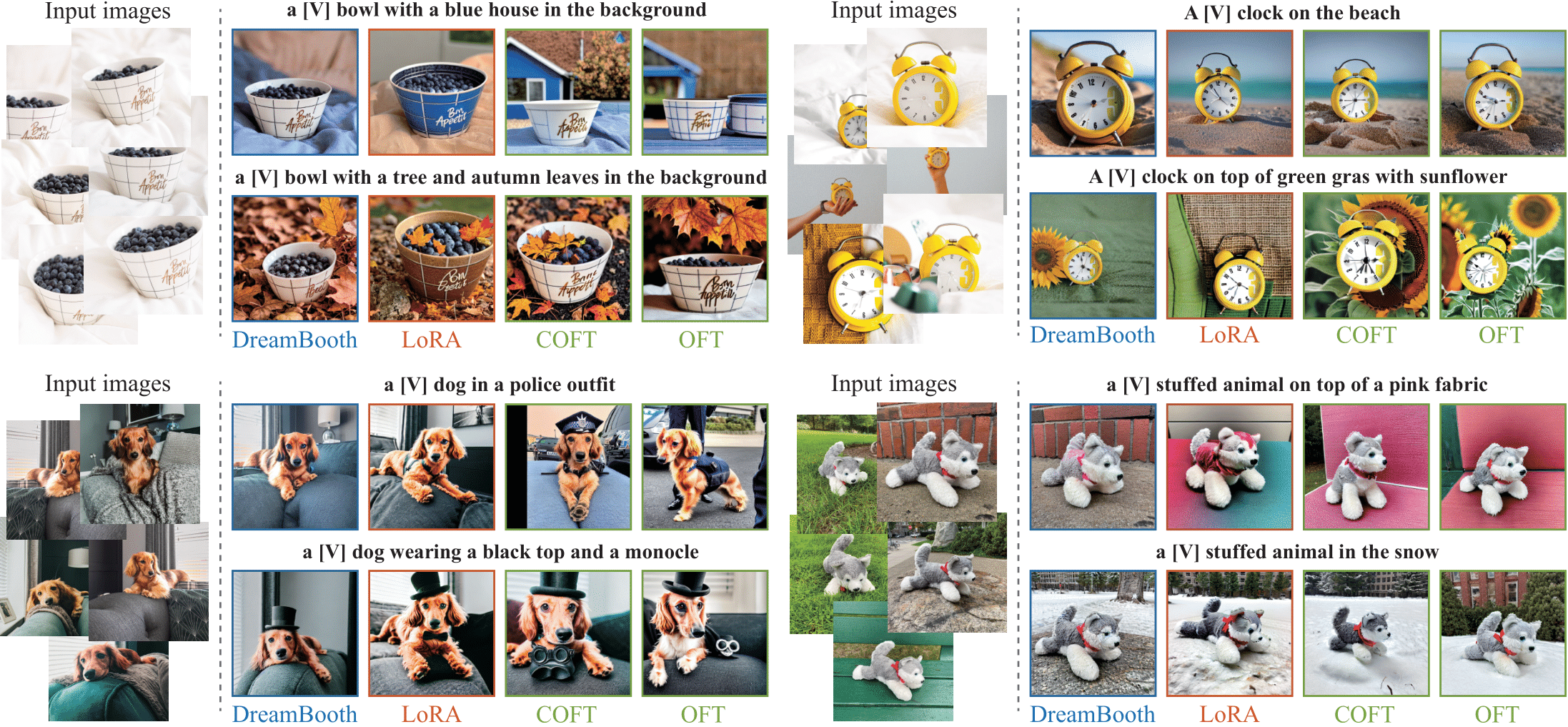

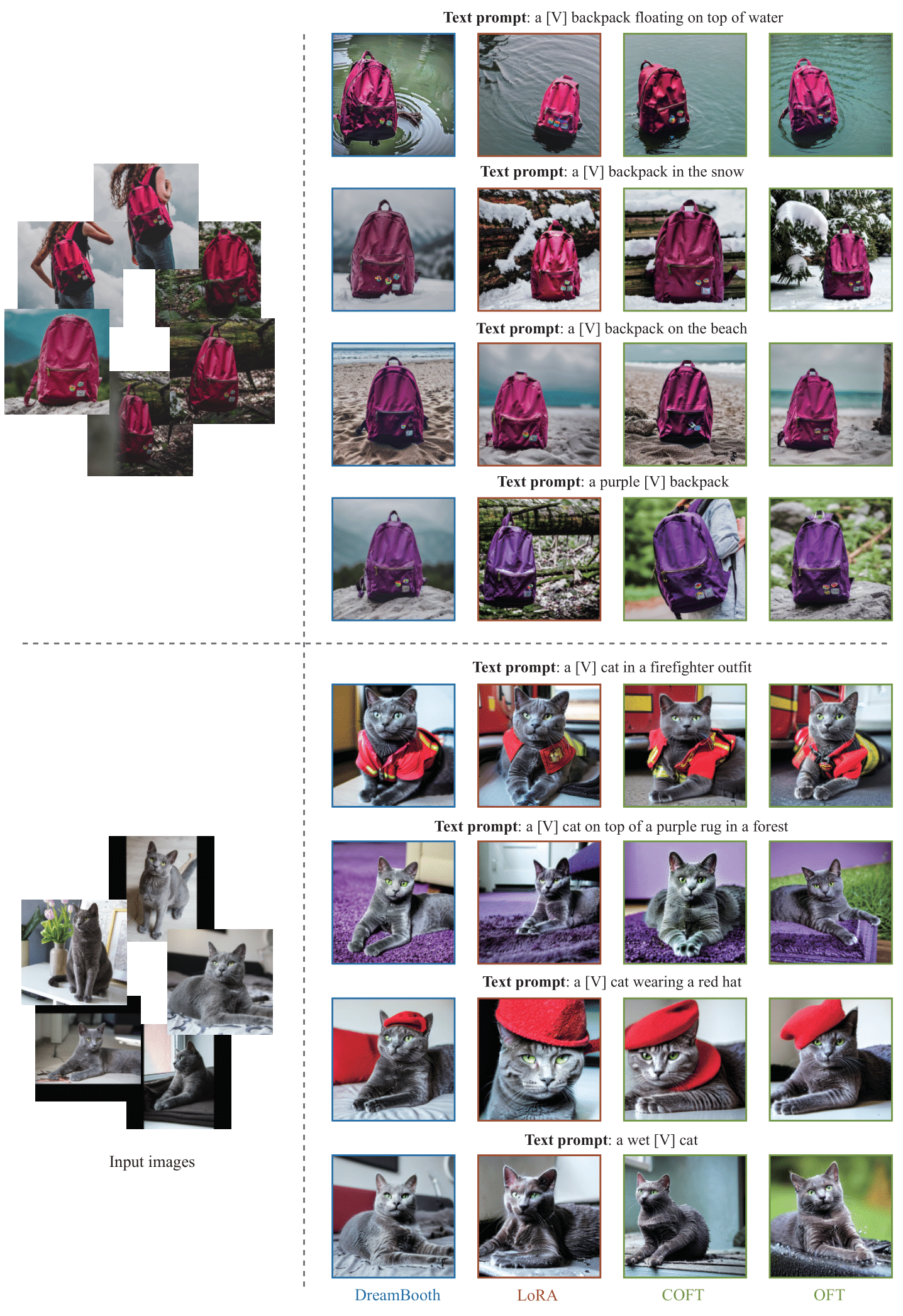

Large text-to-image diffusion models have impressive capabilities in generating photorealistic images from text prompts. How to effectively guide or control these powerful models to perform different downstream tasks remains an important open problem. To tackle this challenge, we introduce a principled finetuning method, Orthogonal Finetuning (OFT), for adapting text-to-image diffusion models to downstream tasks. Unlike existing methods, OFT provably preserves hyperspherical energy, which characterizes the pairwise neuron relationship on the unit hypersphere. We find that this property is crucial for preserving the semantic generation ability of text-to-image diffusion models. To improve finetuning stability, we further propose Constrained Orthogonal Finetuning (COFT), which imposes an additional radius constraint on the hypersphere.

Specifically, we consider two important text-to-image finetuning tasks: subject-driven generation, where the goal is to generate subject-specific images given a few images of a subject and a text prompt, and controllable generation, where the goal is to enable the model to take in additional control signals. We empirically show that our OFT framework outperforms existing methods in generation quality and convergence speed.

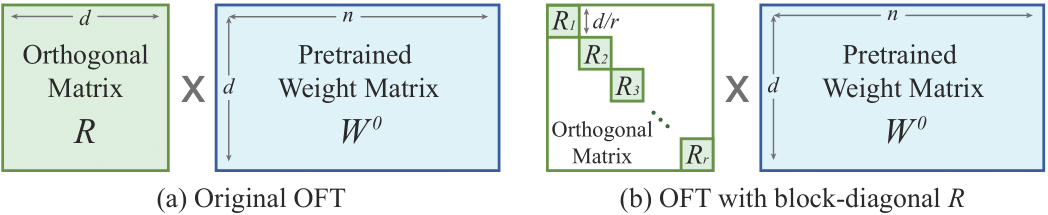

The basic idea is to finetune the pretrained weight matrices with an orthogonal transformation. More specifically, we transform all the neurons in the same layer with one orthogonal matrix, so that the angle between any pair of neurons stays unchanged. Because hyperspherical energy depends only on the pairwise relationships between normalized neurons, this property exactly preserves the hyperspherical energy among the neurons. Preserving hyperspherical energy can, in turn, effectively prevent model collapse.
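The invariance described above can be checked numerically. The following is a minimal NumPy sketch, not the authors' implementation. It assumes neurons are stored as the columns of a weight matrix, builds the orthogonal matrix with a Cayley transform (one standard way to parameterize orthogonal matrices), and takes hyperspherical energy to be the sum of inverse pairwise distances between unit-normalized neurons. The helper names `cayley_orthogonal`, `pairwise_cosines`, and `hyperspherical_energy` are hypothetical and used only for illustration.

```python
import numpy as np


def cayley_orthogonal(params: np.ndarray) -> np.ndarray:
    """Map an arbitrary square matrix to an orthogonal one (Cayley transform)."""
    skew = params - params.T                 # skew-symmetric: skew.T == -skew
    eye = np.eye(params.shape[0])
    # (I + S)(I - S)^{-1}; I - S is invertible for any real skew-symmetric S.
    return (eye + skew) @ np.linalg.inv(eye - skew)


def pairwise_cosines(weight: np.ndarray) -> np.ndarray:
    """Cosine of the angle between every pair of neurons (columns)."""
    unit = weight / np.linalg.norm(weight, axis=0, keepdims=True)
    return unit.T @ unit


def hyperspherical_energy(weight: np.ndarray) -> float:
    """Assumed definition: sum over i != j of 1 / ||w_i_hat - w_j_hat||."""
    unit = weight / np.linalg.norm(weight, axis=0, keepdims=True)
    diff = unit[:, :, None] - unit[:, None, :]   # shape (d, n, n)
    dist = np.linalg.norm(diff, axis=0)          # shape (n, n)
    off_diag = ~np.eye(dist.shape[0], dtype=bool)
    return float(np.sum(1.0 / dist[off_diag]))


rng = np.random.default_rng(0)
d, n = 8, 5                                   # neuron dimension, neuron count
W0 = rng.normal(size=(d, n))                  # stand-in for pretrained weights
R = cayley_orthogonal(0.1 * rng.normal(size=(d, d)))
W = R @ W0                                    # one orthogonal matrix for the whole layer

is_orthogonal = np.allclose(R.T @ R, np.eye(d))
angles_kept = np.allclose(pairwise_cosines(W0), pairwise_cosines(W))
energy_kept = np.isclose(hyperspherical_energy(W0), hyperspherical_energy(W))
print(is_orthogonal, angles_kept, energy_kept)
```

Under these assumptions, the pairwise cosines and the energy of `W` should agree with those of `W0` up to floating-point error, whereas a generic non-orthogonal update of `W0` would typically change both.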

@InProceedings{Qiu2023OFT,
  title = {Controlling Text-to-Image Diffusion by Orthogonal Finetuning},
  author = {Qiu, Zeju and Liu, Weiyang and Feng, Haiwen and Xue, Yuxuan and Feng, Yao and Liu, Zhen and Zhang, Dan and Weller, Adrian and Sch{\"o}lkopf, Bernhard},
  booktitle = {NeurIPS},
  year = {2023}
}